Bagged trees#

# When using JupyterLite, uncomment and install the `skrub` package.

%pip install skrub

import matplotlib.pyplot as plt

import skrub

skrub.patch_display() # makes nice display for pandas tables

/home/runner/work/traces-sklearn/traces-sklearn/.pixi/envs/docs/bin/python: No module named pip

Note: you may need to restart the kernel to use updated packages.

Bagging estimator#

We see that increasing the depth of the tree leads to an over-fitted model. We can bypass choosing a specific depth by combining several trees together.

Let’s start by training several trees on slightly different data. We can generate slightly different datasets by randomly sampling with replacement. In statistics, we call this a bootstrap sample. We will use the iris dataset to create such an ensemble and keep some data for training and testing.

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)

X, y = X[:100], y[:100]

X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y)

Before training several decision trees, let’s run a single tree. Instead of training

this tree on X_train, we want to train it on a bootstrap sample. We can use the

np.random.choice function to sample indices with replacement. We need to create a

sample_weight vector and pass it to the fit method of the DecisionTreeClassifier.

We provide the generate_sample_weight function to generate the sample_weight array.

import numpy as np

def bootstrap_idx(X):

indices = np.random.choice(np.arange(X.shape[0]), size=X.shape[0], replace=True)

return indices

bootstrap_idx(X_train)

array([20, 69, 65, 74, 49, 31, 10, 38, 33, 24, 29, 40, 43, 50, 47, 54, 65,

66, 64, 53, 48, 1, 27, 57, 10, 27, 5, 7, 40, 39, 52, 18, 8, 59,

67, 70, 20, 70, 48, 19, 1, 54, 63, 27, 44, 62, 10, 49, 6, 48, 53,

24, 51, 12, 0, 2, 74, 2, 57, 45, 37, 27, 21, 8, 59, 59, 63, 65,

47, 24, 34, 0, 58, 25, 69])

from collections import Counter

Counter(bootstrap_idx(X_train))

Counter({np.int64(62): 4,

np.int64(39): 3,

np.int64(29): 3,

np.int64(1): 3,

np.int64(0): 3,

np.int64(58): 3,

np.int64(2): 3,

np.int64(20): 3,

np.int64(74): 2,

np.int64(47): 2,

np.int64(66): 2,

np.int64(59): 2,

np.int64(64): 2,

np.int64(9): 2,

np.int64(61): 2,

np.int64(12): 2,

np.int64(11): 2,

np.int64(3): 2,

np.int64(69): 2,

np.int64(73): 1,

np.int64(60): 1,

np.int64(36): 1,

np.int64(38): 1,

np.int64(57): 1,

np.int64(42): 1,

np.int64(41): 1,

np.int64(45): 1,

np.int64(7): 1,

np.int64(52): 1,

np.int64(27): 1,

np.int64(16): 1,

np.int64(44): 1,

np.int64(46): 1,

np.int64(63): 1,

np.int64(19): 1,

np.int64(18): 1,

np.int64(68): 1,

np.int64(26): 1,

np.int64(55): 1,

np.int64(25): 1,

np.int64(13): 1,

np.int64(48): 1,

np.int64(53): 1,

np.int64(32): 1,

np.int64(23): 1,

np.int64(50): 1,

np.int64(6): 1})

def bootstrap_sample(X, y):

indices = bootstrap_idx(X)

return X[indices], y[indices]

X_train_bootstrap, y_train_bootstrap = bootstrap_sample(X_train, y_train)

print(f"Classes distribution in the original data: {Counter(y_train)}")

print(f"Classes distribution in the bootstrap: {Counter(y_train_bootstrap)}")

Classes distribution in the original data: Counter({np.int64(1): 38, np.int64(0): 37})

Classes distribution in the bootstrap: Counter({np.int64(0): 41, np.int64(1): 34})

EXERCISE: Create a bagging classifier

A bagging classifier trains several decision tree classifiers, each on a different bootstrap sample.

Create several

DecisionTreeClassifierinstances and store them in a Python listLoop through these trees and

fitthem by generating a bootstrap sample using thebootstrap_samplefunctionTo predict with this ensemble on new data (testing set), provide the same set to each tree and call the

predictmethod. Aggregate all predictions in a NumPy arrayOnce you have the predictions, provide a single prediction by keeping the most predicted class (majority vote)

Check the accuracy of your model

# Write your code here.

EXERCISE: Using scikit-learn

After implementing your own bagging classifier, use scikit-learn’s BaggingClassifier

to fit the above data.

# Write your code here.

Note about the base estimator#

In the previous section, we used a decision tree as the base estimator in the bagging ensemble. However, this method accepts any kind of base estimator. We will compare two bagging models: one uses decision trees and another uses a linear model with a preprocessing step.

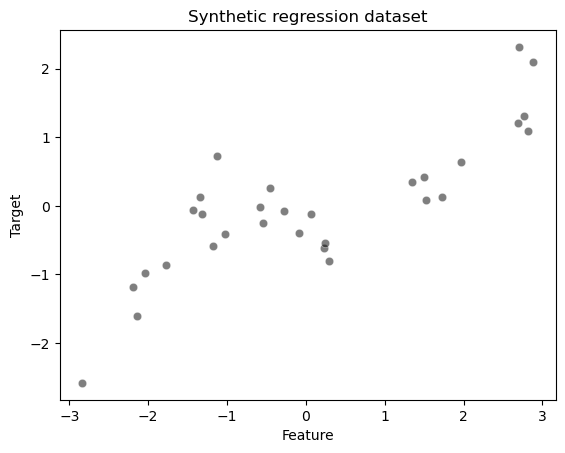

Let’s first create a synthetic regression dataset.

import pandas as pd

# Create a random number generator to set the randomness

rng = np.random.default_rng(1)

n_samples = 30

x_min, x_max = -3, 3

x = rng.uniform(x_min, x_max, size=n_samples)

noise = 4.0 * rng.normal(size=n_samples)

y = x**3 - 0.5 * (x + 1) ** 2 + noise

y /= y.std()

data_train = pd.DataFrame(x, columns=["Feature"])

data_test = pd.DataFrame(np.linspace(x_max, x_min, num=300), columns=["Feature"])

target_train = pd.Series(y, name="Target")

# Uncomment this line in JupyterLite

%pip install seaborn

import seaborn as sns

ax = sns.scatterplot(x=data_train["Feature"], y=target_train, color="black", alpha=0.5)

ax.set_title("Synthetic regression dataset")

plt.show()

/home/runner/work/traces-sklearn/traces-sklearn/.pixi/envs/docs/bin/python: No module named pip

Note: you may need to restart the kernel to use updated packages.

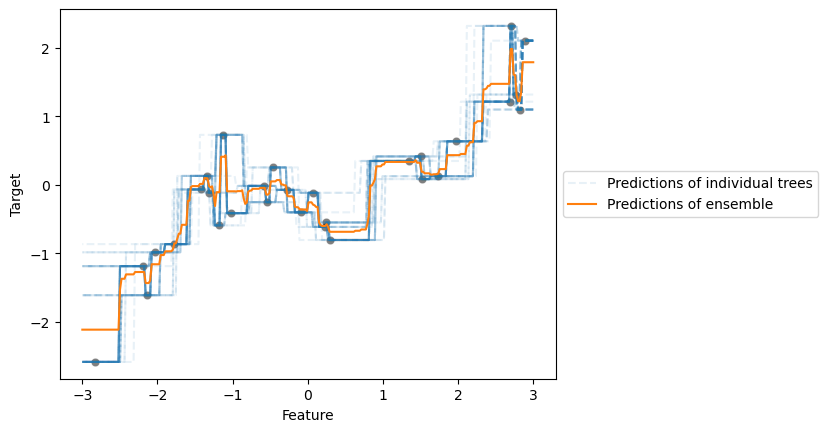

We will first train a BaggingRegressor where the base estimators are

DecisionTreeRegressor instances.

from sklearn.ensemble import BaggingRegressor

bagged_trees = BaggingRegressor(n_estimators=50, random_state=0)

bagged_trees.fit(data_train, target_train)

BaggingRegressor(n_estimators=50, random_state=0)In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook.

On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

BaggingRegressor(n_estimators=50, random_state=0)

We can make a plot showing the prediction from each individual tree and the averaged response from the bagging regressor.

for tree_idx, tree in enumerate(bagged_trees.estimators_):

label = "Predictions of individual trees" if tree_idx == 0 else None

tree_predictions = tree.predict(data_test.to_numpy())

plt.plot(

data_test,

tree_predictions,

linestyle="--",

alpha=0.1,

color="tab:blue",

label=label,

)

sns.scatterplot(x=data_train["Feature"], y=target_train, color="black", alpha=0.5)

bagged_trees_predictions = bagged_trees.predict(data_test)

plt.plot(

data_test,

bagged_trees_predictions,

color="tab:orange",

label="Predictions of ensemble",

)

plt.legend(loc="center left", bbox_to_anchor=(1, 0.5))

plt.show()

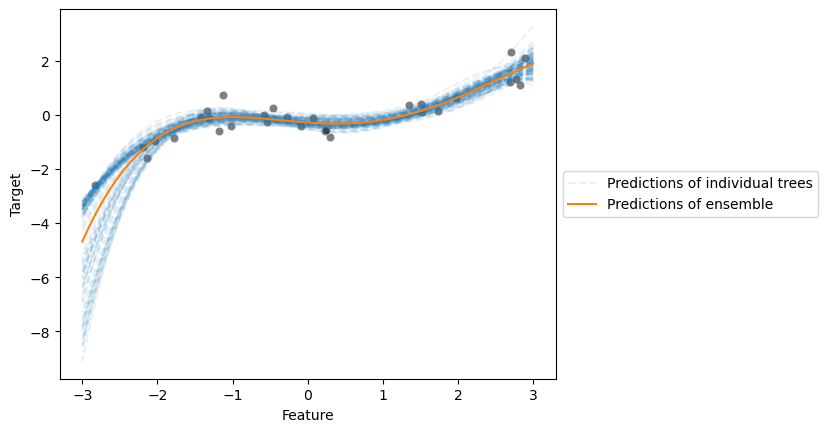

Now, we will show that we can use a model other than a decision tree. We will create

a model that uses PolynomialFeatures to augment features followed by a Ridge

linear model.

from sklearn.linear_model import Ridge

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import MinMaxScaler, PolynomialFeatures

polynomial_regressor = make_pipeline(

MinMaxScaler(),

PolynomialFeatures(degree=4),

Ridge(alpha=1e-10),

)

bagged_trees = BaggingRegressor(

n_estimators=100, estimator=polynomial_regressor, random_state=0

)

bagged_trees.fit(data_train, target_train)

for tree_idx, tree in enumerate(bagged_trees.estimators_):

label = "Predictions of individual trees" if tree_idx == 0 else None

tree_predictions = tree.predict(data_test.to_numpy())

plt.plot(

data_test,

tree_predictions,

linestyle="--",

alpha=0.1,

color="tab:blue",

label=label,

)

sns.scatterplot(x=data_train["Feature"], y=target_train, color="black", alpha=0.5)

bagged_trees_predictions = bagged_trees.predict(data_test)

plt.plot(

data_test,

bagged_trees_predictions,

color="tab:orange",

label="Predictions of ensemble",

)

plt.legend(loc="center left", bbox_to_anchor=(1, 0.5))

plt.show()

We observe that both base estimators can model our toy example effectively.

Random Forests#

Random forest classifier#

The random forest classifier is a popular variant of the bagging classifier. In addition to bootstrap sampling, random forest uses a random subset of features to find the best split.

EXERCISE: Create a random forest classifier

Use your previous code that generated several DecisionTreeClassifier instances.

Check the classifier options and modify the parameters to use only \(\sqrt{F}\) features

for splitting, where \(F\) represents the number of features in the dataset.

# Write your code here.

EXERCISE: Using scikit-learn

After implementing your own random forest classifier, use scikit-learn’s

RandomForestClassifier to fit the above data.

# Write your code here.

Random forest regressor#

EXERCISE:

Load the dataset from

sklearn.datasets.fetch_california_housingFit a

RandomForestRegressorwith default parametersFind the number of features used during training

Identify the differences between

BaggingRegressorandRandomForestRegressor

# Write your code here.

Hyperparameters#

The hyperparameters affecting the training process match those of decision trees.

Check the documentation for details. Since we work with a forest of trees, we have

an additional parameter n_estimators. Let’s examine how this parameter affects

performance using a validation curve.

# Uncomment this line in JupyterLite

%pip install pyodide-http

# import pyodide_http

# pyodide_http.patch_all()

/home/runner/work/traces-sklearn/traces-sklearn/.pixi/envs/docs/bin/python: No module named pip

Note: you may need to restart the kernel to use updated packages.

from sklearn.datasets import fetch_california_housing

X, y = fetch_california_housing(return_X_y=True, as_frame=True)

EXERCISE:

Compute train and test scores to analyze how the

n_estimatorsparameter affects performance. Define a range of values for this parameterPlot the train and test scores with confidence intervals

Consider: How does increasing the number of trees affect statistical performance? What trade-offs exist with computational performance?

# Write your code here.

You can also tune other parameters that control individual tree overfitting. Sometimes shallow trees suffice. However, random forests typically use deep trees since we want to overfit the learners on bootstrap samples - the ensemble combination mitigates this overfitting. Using shallow (underfitted) trees may lead to an underfitted forest.